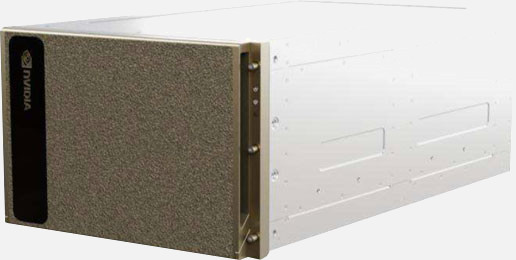

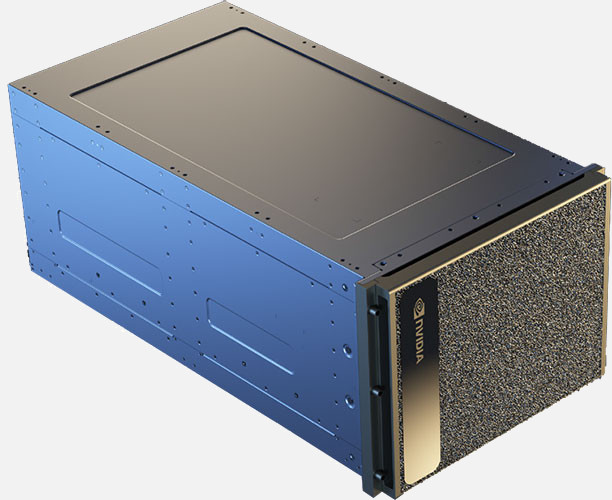

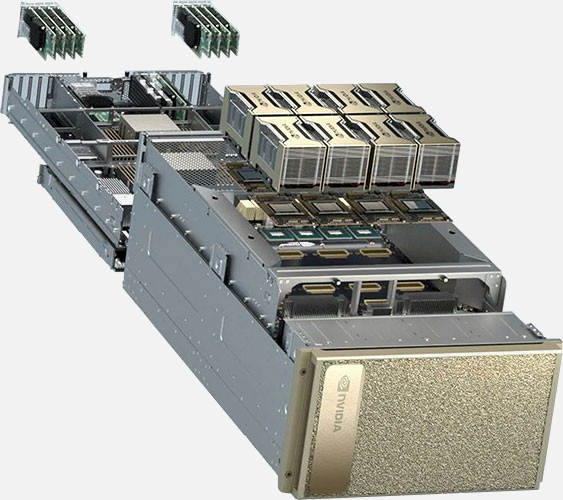

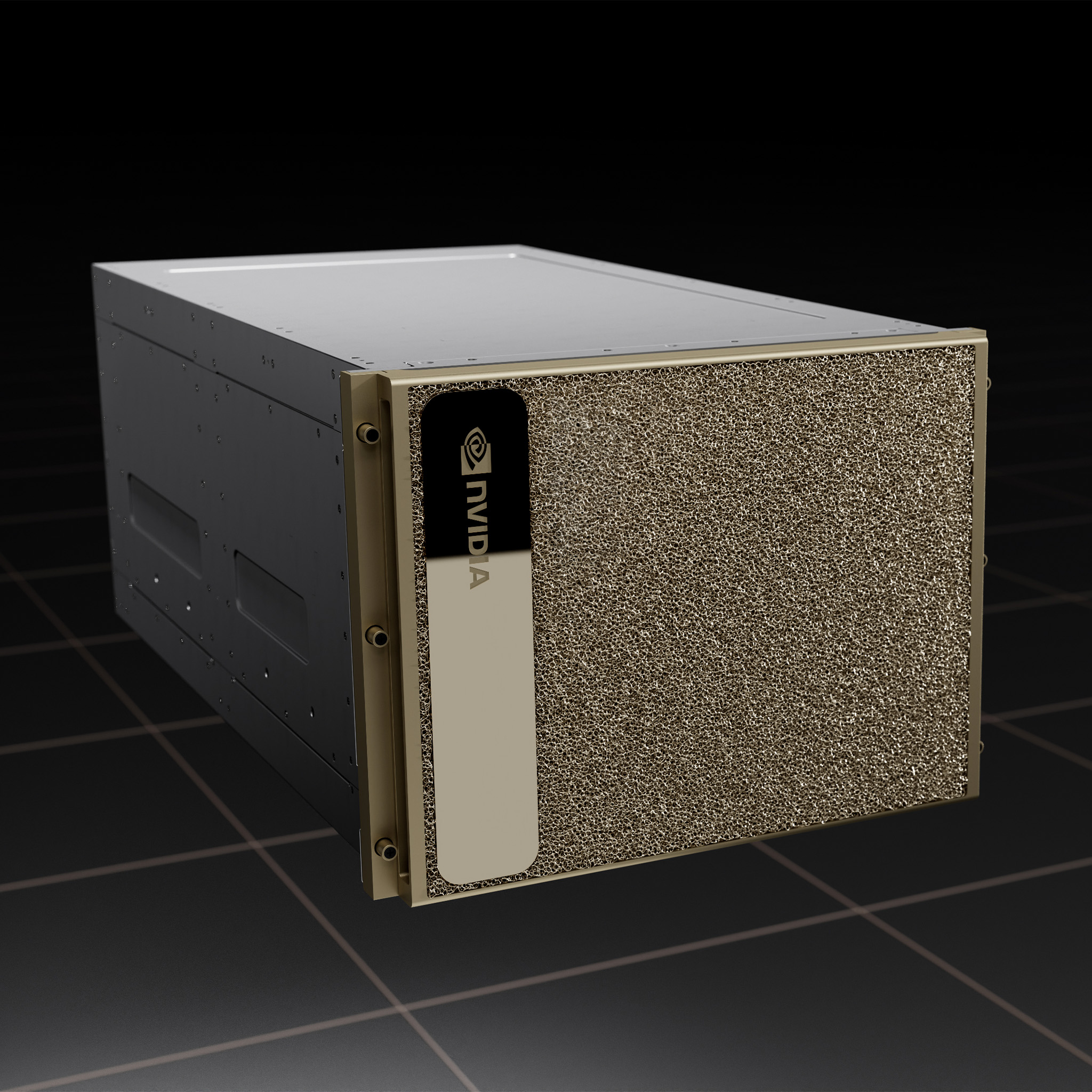

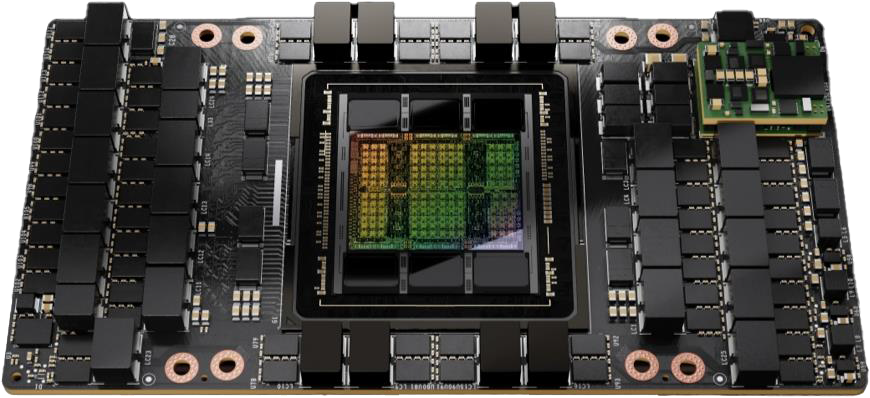

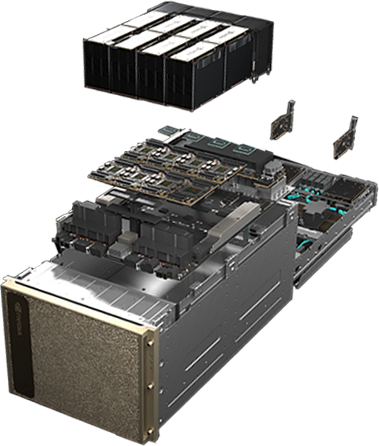

The NVIDIA DGX H100 is an 8U rackmount server configurable with processors from the Intel Xeon Scalable Gen 4/5 series. Built using the latest enterprise-class server technology, the NVIDIA DGX H100 uses NVMe and M.2 fixed drives and is ideal for those requiring a combination of high performance and density, backed by high-performance server memory.

The NVIDIA DGX H100 rackmount server with fixed drives offers a cheaper alternative to servers with hot-swappable drives, bringing the total system cost down when hot-swappable drives are not required.

Storage Technology

Originally designed for and utilised in mobile applications such as laptops and notebooks, 2.5" hard drives are increasingly used in servers due to their lower power consumption and space-saving characteristics.

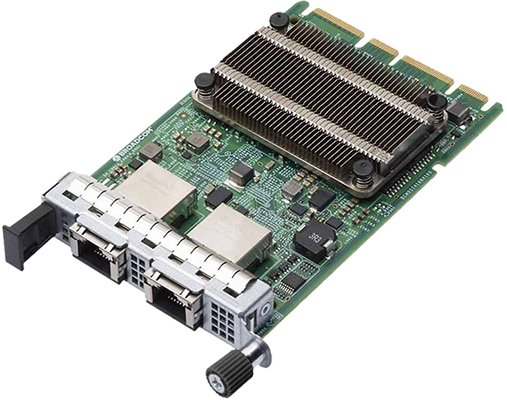

Configure for your Network

The NVIDIA DGX H100 is ready to be deployed into your network environment with a wide range of high-throughput connectivity options.

Configure the NVIDIA DGX H100 to match your application I/O demands and network infrastructure with Broadcom Ethernet adaptors.

Whether your application demands the highest networking throughput, or you're looking for a more modest enterprise-grade networking option such as Gigabit Ethernet, the NVIDIA DGX H100 is fully customisable with a wide range of robust I/O connectivity options.

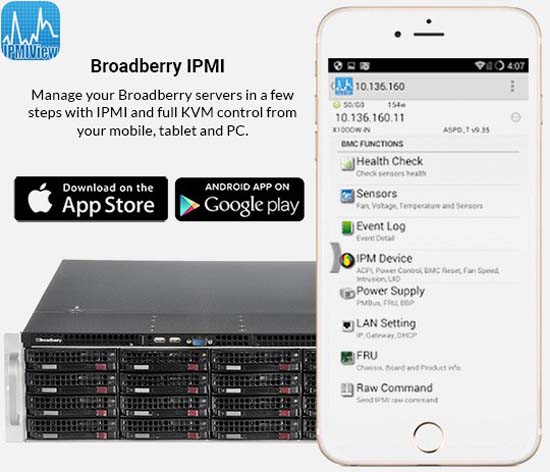

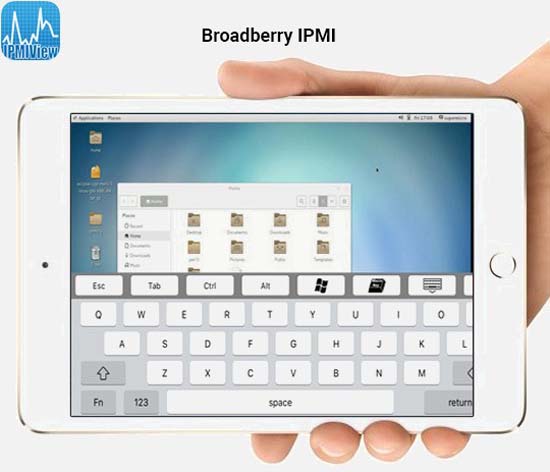

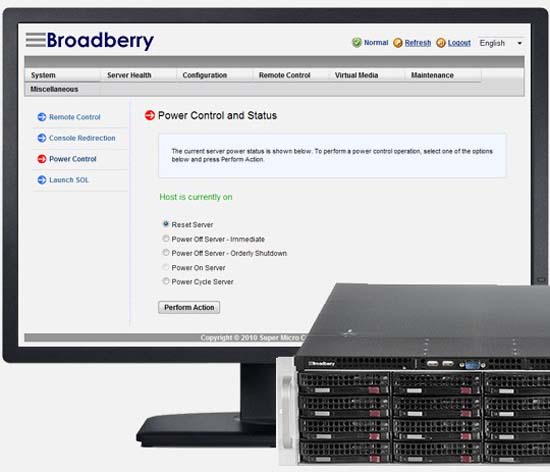

IPMI 2.0 & KVM over IP

Whereas most server manufacturers charge ongoing licence fees for IPMI, powerful, industry-standard server management is built into the NVIDIA DGX H100 at no extra cost.

Using the dedicated 3rd LAN port or the on-board Gigabit Ethernet ports, you can take full control of the server from a web browser anywhere in the world, as though you were standing in front of it, via a pre-defined IP address and password.

Check the health of the NVIDIA DGX H100 components, fan speeds and temperatures, update firmware, check logs and set SMTP alerts for your pre-configured thresholds, and list the health of all your servers on one simple screen.

The NVIDIA DGX H100 IPMI feature also allows complete Keyboard, Video and Mouse (KVM) control, and can send CD/DVD images over IP to install software and operating systems remotely.
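As a rough illustration of the kind of health check described above, the Python sketch below parses a pipe-delimited sensor table (the layout printed by the open-source `ipmitool sensor` command) and flags readings above a limit. This is a minimal sketch and not the server's own management tooling: the sensor names, the sample values and the limits are all hypothetical, and a real BMC will report its own sensor list.

```python
from typing import Dict, List, NamedTuple, Optional


class SensorReading(NamedTuple):
    name: str
    value: Optional[float]  # None when the reading is not numeric (e.g. "na")
    unit: str
    status: str


def parse_sensor_table(text: str) -> List[SensorReading]:
    """Parse rows of the form: name | value | unit | status | ..."""
    readings = []
    for line in text.splitlines():
        fields = [f.strip() for f in line.split("|")]
        if len(fields) < 4 or not fields[0]:
            continue  # skip blank or malformed rows
        try:
            value = float(fields[1])
        except ValueError:
            value = None  # "na" or a discrete (non-numeric) reading
        readings.append(SensorReading(fields[0], value, fields[2], fields[3]))
    return readings


def over_threshold(readings: List[SensorReading],
                   limits: Dict[str, float]) -> List[SensorReading]:
    """Return the readings whose numeric value exceeds the limit set for that sensor."""
    return [
        r for r in readings
        if r.name in limits and r.value is not None and r.value > limits[r.name]
    ]


# Hypothetical sample text; sensor names and values are made up for illustration.
SAMPLE = """\
CPU1 Temp        | 45.000     | degrees C  | ok    | na | na
FAN1             | 8400.000   | RPM        | ok    | na | na
Inlet Temp       | na         | degrees C  | na    | na | na
"""

if __name__ == "__main__":
    for reading in over_threshold(parse_sensor_table(SAMPLE), {"CPU1 Temp": 40.0}):
        print(f"ALERT: {reading.name} = {reading.value} {reading.unit}")
```

In practice the text would come from querying the management port over the network, for example with `ipmitool -I lanplus -H <bmc-address> -U <user> sensor`, and the alerting itself can be left to the built-in SMTP thresholds.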

Expand with PCI-Express Expansion

Configure the NVIDIA DGX H100 with Broadcom Ethernet adaptors. With a wide range of enterprise-class PCI-Express add-on options available, the NVIDIA DGX H100 is a flexible platform for a wide range of applications.

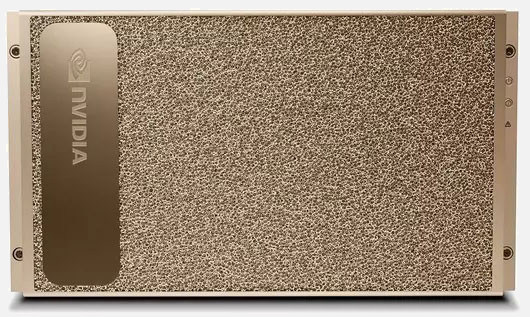

NVIDIA DGX H100®

Artificial Intelligence Server for HMRC Fraud Detection

Ultra-Performance AI Server for Samsung

Due to the height of this server, a PCI-Express card will not physically fit unless you use a riser card.